Clustering Methods in Automotive Data Science – An Overview

Clustering is one of those buzzwords that like to float around the room as soon as topics like machine learning, data science in general and highly complex data are discussed. If we receive large and complex amounts of data via Big Data, we must first be able to understand and evaluate them before they have any concrete use for us. Clustering, along with its "big sister" classification, forms one of the big umbrella terms for such machine analysis techniques and helps us gain initial insights into the data and identify any patterns that may exist. Since both topics are complex and we don't want to shortchange either of them, this article covers clustering and three of the most common clustering techniques. In the follow-up article, which we will link here accordingly, we will cover classification and its best practices in more detail.

We hope you enjoy our overview of the most important clustering methods in automotive data science and perhaps experience an "aha" moment or two.

- Clustering vs. classification - With examples

- What is clustering, and when do I use it?

- Hierarchical clustering - clusters on multiple levels

- k-Means - The Godfather of Clustering for Big Data

- Clustering with DBSCAN - When center and density are searched for

- Clustering Methods in Data Science - The Conclusion

Marc

Marketing Professional

12.05.22

Approx. 18 min

Clustering vs. classification – With examples

Let’s catch up a bit – it’s not for nothing that our age is sometimes called the “data age.” Data is essential, ubiquitous and sometimes available in incredible mass. In the automotive sector, data collection is essential to create safe, functional and advanced products, and it starts long before the factory floors of car manufacturers, often called “OEMs” (“Original Equipment Manufacturers”). The supplier industry provides hardware and software, develops, researches – and collects data, evaluates it and prepares it in the way the OEM wants.

Since data volumes are enormous thanks to Big Data (see also our article on “Big Data Pipelines”), it is a task of Data Science to segment and exploit them. But where to start? And by what method? Classical machine learning basically knows two approaches for this: clustering and classification. Classification is one of the “supervised” learning methods, which means that the data used must already have labels (i.e., each data point already belongs to a known category). As a rough estimate rather than an exact figure, around seventy to eighty percent of the methods used in industrial machine learning are supervised learning methods. Clustering is therefore used less frequently, but it has the great advantage that, as an “unsupervised” process, it does not depend on pre-existing labels. This can be helpful, for example, when analyzing error logs in which the type and cause of the errors are still largely unknown (these would be the labels in supervised learning), but correlations between various fault patterns can already be found.

Clustering is about finding structures and patterns in unlabeled data. However, since there is no real “right or wrong” compared to classification due to the lack of labels, domain knowledge and critical questioning of the results of the algorithms is of particular importance here. With this knowledge in mind, we will discuss three of the most popular clustering methods in a little more detail in the following section.

What is clustering, and when do I use it?

When deciding whether classification or clustering is the right approach for a problem, the focus is primarily on the specific problem. “In the past, you did supervised learning, you needed training data for that. Today you just do unsupervised learning, then you don’t need the training data.” You’ve probably heard it that way or something similar when it comes to clustering versus classification. While there may certainly be a spark of truth here, both methods are simply made for different problems.

Let’s imagine we are a company trying to segment our customers into different target groups. The data is available in the form of visit logs on our website. Now we have two options: On the one hand, we could think in terms of traditional categories and sort our visitors by age, gender, and so on. In this case, classification would probably be the appropriate choice. But what if our visitors exhibit different patterns of behavior that cannot be explained by these predetermined categories? This is where clustering comes in, because it allows us to uncover exactly these unknown relationships. Thus, clustering is also suitable as a preliminary stage for supervised processes, because based on these new findings, they can often be set up very well.

But what can such a clustering look like? Let’s picture it with an example: No matter what form the data takes, whether text, error logs, or many, many numbers, we imagine the data points as pieces whirling around in the universe. In machine learning, we ask ourselves: Where are my points? Where are my suns, my planets, and where do they “clump” together into “galaxies” or “globular clusters”? In a conventional two-dimensional space, like a drawing, we can see this immediately. In three-dimensional space, like a computer game, we could rotate the space and the data and still see the structure. In a space with 64 dimensions, however, nothing can be seen by eye anymore. In the practical example of a fault memory, such dimensions would be: How often did the fault occur? When exactly? What accompanying data comes with it, such as mileage, vehicle data and much more? This gives us numerous dimensions (none of them truly spatial, of course) and thus a multidimensional space in which we now begin to cluster the data.
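To make the idea of "dimensions" a little more tangible, here is a minimal Python sketch. The three fields (number of occurrences, mileage in km, vehicle age in days) and all values are purely hypothetical and only stand in for real fault-memory data. Each record becomes a point in a three-dimensional space. As an assumption on our side, every dimension is rescaled to the range 0 to 1 so that mileage does not dominate simply because its numbers are larger; then we measure how far apart two records are.

```python
from math import dist

# Hypothetical fault-memory records: (occurrences, mileage_km, vehicle_age_days)
records = [
    (3, 12_000, 400),
    (4, 12_500, 420),
    (40, 150_000, 2_900),
]

def min_max_scale(rows):
    """Rescale every column (dimension) to the range 0..1."""
    columns = list(zip(*rows))
    lows = [min(column) for column in columns]
    highs = [max(column) for column in columns]
    return [
        tuple(
            (value - low) / (high - low) if high > low else 0.0
            for value, low, high in zip(row, lows, highs)
        )
        for row in rows
    ]

scaled = min_max_scale(records)

# Euclidean distance between records in the rescaled 3-dimensional space
d_01 = dist(scaled[0], scaled[1])  # two similar records
d_02 = dist(scaled[0], scaled[2])  # two very different records
print(d_01 < d_02)
```

The same distance calculation works unchanged for 64 or more dimensions; only our ability to look at the result gets lost.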

There are various methods for this, but we will discuss three of the best-known methods below.

Hierarchical clustering – clusters on multiple levels

One of the best-known and most frequently used clustering methods is so-called hierarchical clustering, which is also one of the simplest ways to cluster data. As a picture, we can imagine that the data has the rough structure of a tree whose leaves are the individual data points, connected on several levels via twigs and branches up to the trunk. The question now is: Which leaves belong to which twig, which twigs to which branch, and where do the branches join the trunk? To answer this question, every leaf is first treated as its own small cluster. The two most similar leaves are then assigned to the same twig, forming a new cluster containing the two leaves. In this way, the clusters grow with each step until, in the end, all the leaves are linked together at the trunk. The beauty of this approach is that each level can be viewed individually, allowing nested relationships to be identified.

But how does the algorithm know when two leaves form a new cluster, or one leaf at a time is assigned to an existing cluster? There are different “linkage” approaches for this, such as “average linkage” where the similarity between the new leaf and the “average” leaf of the previous branch is considered. Alternative approaches, on the other hand, target the most similar leaf located on the branch (“single linkage”) or the most dissimilar (“complete linkage”).

To cite another example, the image of a DNA helix could be visualized. From a geometric point of view, it is primarily two bands that wrap around each other. Other methods such as the k-Means discussed below would be unsuitable here, since this would practically “cut through the middle”. Single Linkage Hierarchical Clustering, however, would be a good way to identify this data structure, since it says – in simplified terms – “If A is an existing cluster, and point X is very close to that cluster, this point will belong to it.” This allows the clustering to gradually work its way forward and identify even complex structures until all similar points have been grouped together and a cut can be made to see at what point the clustering has ended.
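The merging procedure and the linkage variants described above can be sketched in a few lines of Python. This is a deliberately naive illustration, not an optimized implementation: the function names are our own, "average" is taken here as the mean of all pairwise distances between two clusters, and the "cut" is expressed simply as the number of clusters at which merging stops. The toy data consists of two parallel bands of points, a flattened stand-in for the two strands of the helix.

```python
from math import dist

def linkage_distance(a, b, linkage):
    """Distance between two clusters (lists of points) under a linkage rule."""
    pairwise = [dist(p, q) for p in a for q in b]
    if linkage == "single":    # most similar pair of leaves
        return min(pairwise)
    if linkage == "complete":  # most dissimilar pair of leaves
        return max(pairwise)
    if linkage == "average":   # mean over all pairs of leaves
        return sum(pairwise) / len(pairwise)
    raise ValueError(f"unknown linkage: {linkage}")

def agglomerative(points, n_clusters, linkage="single"):
    """Merge the two closest clusters until n_clusters remain (the 'cut')."""
    clusters = [[p] for p in points]  # every leaf starts as its own cluster
    while len(clusters) > n_clusters:
        # find the pair of clusters with the smallest linkage distance
        i, j = min(
            ((i, j) for i in range(len(clusters)) for j in range(i + 1, len(clusters))),
            key=lambda pair: linkage_distance(clusters[pair[0]], clusters[pair[1]], linkage),
        )
        clusters[i] = clusters[i] + clusters[j]  # j > i, so index i stays valid
        del clusters[j]
    return clusters

# Two parallel bands: neighbors within a band are 1 apart, the bands are 2 apart
band_a = [(x, 0) for x in range(10)]
band_b = [(x, 2) for x in range(10)]

clusters = agglomerative(band_a + band_b, n_clusters=2, linkage="single")
for cluster in clusters:
    print(sorted(cluster))
```

With single linkage, each band is followed point by point from neighbor to neighbor, so the two bands end up as the two clusters. Passing `linkage="complete"` or `linkage="average"` to the same function shows how the other variants behave on elongated structures.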

k-Means – The Godfather of Clustering for Big Data

The headline anticipates it: clustering via k-Means is considered the most famous clustering method because it scales and parallelizes easily, which makes it especially suitable for clustering huge amounts of data. Above all, the ability to run k-Means in parallel (in some cases on hundreds of high-performance computers) and assemble the result at the end is an advantage that cannot be dismissed for large volumes of data.

Unlike the stepwise construction of nested clusters in hierarchical clustering, in k-Means we look for so-called “centroids”, i.e. prototypes for the respective clusters. This sounds a bit like Machine Learning Star Trek (“The centroids are assimilating instead of labeling, Captain k-Means!”), but a centroid is essentially an idealized representative of a certain kind of data, which is recalculated in every iteration based on the real data points. For the philosophers among our readers, Plato’s Allegory of the Cave and the “idea” of things may come to mind, although the scientific reality is admittedly more complex than our popular-science approach.

The process of k-Means is as simple as it is intelligent: Starting from randomly chosen “starting centroids”, all points are assigned to their nearest centroid (and thus to a cluster). Based on this assignment, new centroids are then calculated as the average of the current cluster. This procedure is repeated until the assignment of points to centroids no longer changes.
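The loop described above (assign every point to its nearest centroid, recompute the centroids, repeat until nothing changes) can be sketched as follows. This is a minimal illustration with names of our own choosing. To keep the run reproducible, the starting centroids are passed in by hand here; in practice they would be drawn at random, for example with `random.sample(points, k)`.

```python
from math import dist

def k_means(points, start_centroids, max_iter=100):
    """Plain k-Means: returns the final centroids and the cluster index of each point."""
    centroids = list(start_centroids)
    k = len(centroids)
    assignment = None
    for _ in range(max_iter):
        # assignment step: every point goes to its nearest centroid
        new_assignment = [
            min(range(k), key=lambda c: dist(p, centroids[c])) for p in points
        ]
        if new_assignment == assignment:  # nothing changed, so we are done
            break
        assignment = new_assignment
        # update step: each centroid becomes the average of its cluster
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # an empty cluster keeps its old centroid
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centroids, assignment

# Two compact, well-separated groups of points
points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]

# Stand-in for a random draw: both starting centroids lie in the same group
centroids, assignment = k_means(points, start_centroids=[points[0], points[1]])
print(centroids)
print(assignment)
```

Even though both starting centroids sit in the same group, the centroids move apart over the iterations until each one represents one group. That is not guaranteed in general: an unlucky start can leave k-Means stuck in a poor solution, which is one reason to repeat the run with several different starts.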

Clustering via k-Means always works well when the data structure is arranged in a kind of “globular cluster”, of approximately the same diameter and similar density, which optimally also do not overlap to any great extent.

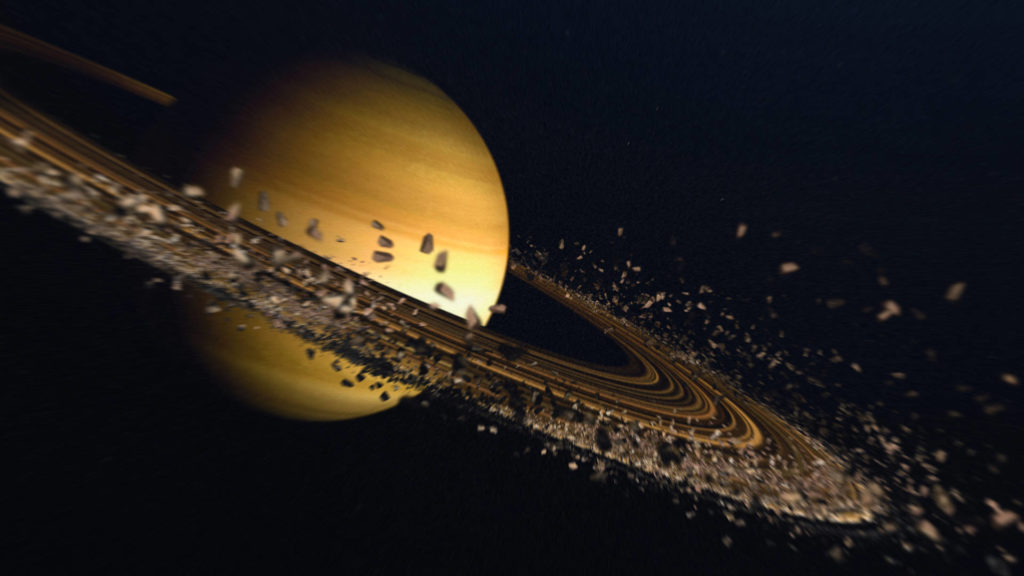

k-Means is not a good fit if the clusters are of different sizes. Where the naked eye can easily perceive the size differences, k-Means cannot separate the clusters cleanly, simply because the underlying algorithm assigns every point to its nearest centroid. An example often used in Data Science and Machine Learning is that of a Mickey Mouse head, i.e. three circular clusters: one large one for the “head” and two smaller ones for the “ears”. Even with an optimal choice of “starting centroids”, the final clusters may not correspond to these three “obviously” recognizable ones. Instead, some points belonging to the “head” may be assigned to the “ears” because, due to the size difference of the clusters, they are closer to an ear’s centroid than to the head’s. Another example would be the planet Saturn, with a thick cluster in the center and a ring-shaped cluster around it. k-Means would not be able to interpret this and would possibly cut the data wrongly through the middle of the planet. For such structures, you guessed it, hierarchical clustering with single linkage would be much better suited.
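A little arithmetic shows why the size difference matters. The coordinates below are made up for illustration: a "head" of radius 10 around (0, 0) and an "ear" of radius 3 around (13, 0), and we assume, as a simplification, that the centroids sit exactly at the centers of the two circles. A point near the edge of the head is then compared against both centroids, exactly as the assignment step of k-Means would do.

```python
from math import dist

# Idealized centroids (assumption: exactly at the centers of the two circles)
centroids = {"head": (0.0, 0.0), "ear": (13.0, 0.0)}
head_radius, ear_radius = 10.0, 3.0

def nearest_centroid(point):
    """The only rule k-Means uses to assign a point: the nearest centroid wins."""
    return min(centroids, key=lambda name: dist(point, centroids[name]))

point = (8.0, 0.0)
inside_head = dist(point, centroids["head"]) <= head_radius  # 8 <= 10
inside_ear = dist(point, centroids["ear"]) <= ear_radius     # 5 <= 3 is False

print(inside_head, inside_ear, nearest_centroid(point))
```

The point lies clearly inside the head and outside the ear, yet it is 5 units from the ear's centroid and 8 units from the head's, so the nearest-centroid rule hands it to the ear.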

It is also important when using k-Means to check the interpretability of the clusters. k-Means is often regarded as a nearly infallible magic tool, but especially in large projects it is essential to look at the clusters again and again to see whether they make sense from a logical point of view or whether k-Means is running into difficulties. Visualization and dimensionality reduction techniques are essential for this. One further advantage, besides scalability, parallelization and the handling of huge amounts of data, is that the method searches for a freely selectable number “k” of clusters (hence the name). Since it is not necessarily known beforehand how large this “k” must be, clusterings for a wide range of values can be computed and compared at the end to check which “k” best explains the data (we will not go into detail here, but the “elbow method” plays a role).
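For readers who want at least a glimpse of the elbow method: the idea is to run k-Means for several values of "k", record for each run the sum of squared distances between the points and their centroids, and look for the "k" after which this value hardly improves anymore. The sketch below is a simplified illustration with names of our own. As an assumption made purely for reproducibility, it uses a deterministic farthest-point start instead of random starting centroids.

```python
def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

def farthest_point_start(points, k):
    """Deterministic start (our assumption): each new centroid is the point
    farthest away from all centroids chosen so far."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(squared_distance(p, c) for c in centroids))
        )
    return centroids

def k_means_inertia(points, k, max_iter=100):
    """Run k-Means and return the sum of squared distances to the centroids."""
    centroids = farthest_point_start(points, k)
    assignment = None
    for _ in range(max_iter):
        new_assignment = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c])) for p in points
        ]
        if new_assignment == assignment:
            break
        assignment = new_assignment
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return sum(squared_distance(p, centroids[a]) for p, a in zip(points, assignment))

# Three compact groups of four points each
points = (
    [(0, 0), (0, 1), (1, 0), (1, 1)]
    + [(10, 0), (10, 1), (11, 0), (11, 1)]
    + [(5, 10), (5, 11), (6, 10), (6, 11)]
)

inertia_by_k = {k: k_means_inertia(points, k) for k in range(1, 5)}
for k, inertia in inertia_by_k.items():
    print(k, round(inertia, 2))
```

On this toy data the value drops sharply up to k = 3 and barely changes afterwards; that bend in the curve is the "elbow". On real data the bend is usually far less clear-cut, so it is a hint rather than a proof.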

Clustering with DBSCAN – When center and density are searched for

Last but not least, we take a look at DBSCAN as the third clustering method. DBSCAN does not stand for a scan of frequently late public transport, but for “Density-Based Spatial Clustering of Applications with Noise”, a density-based clustering method (we will spare you further silly puns here, but we have surely stimulated your imagination already!).

This method picks a point in the high-dimensional space and searches its immediate vicinity for further points. The operating principle that DBSCAN brings to the table is that structures are identified via a center, a radius and the density of points within that radius. Clusters are sets of points that are “density-connected”. This means that a point is either “dense” itself, i.e. a sufficiently large number of other points can be found within a certain radius, or it is located in the immediate vicinity of such a dense point.

From the previous description you can see that DBSCAN can also explicitly detect “noise”, namely points that are not “density-connected”. Where hierarchical clustering and k-Means necessarily assign each data point to a cluster, DBSCAN can identify data points that do not belong to any cluster and are, in a sense, merely noise. This can be an excellent way to find the data that really matters in the end, rather than forcing every data point into an existing cluster and thus overlooking the points that do not fit any of the patterns found. DBSCAN can thus isolate data points without a cluster and without many close neighbors, which other clustering methods cannot do because their models simply do not provide for it. DBSCAN could therefore recognize the Mickey Mouse head mentioned earlier much better and determine which data points belong to the structure and which do not (but which may still be of importance).
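The principle of "dense" points, their neighbors and the leftover noise can be sketched in plain Python. This is a naive illustration, not a tuned implementation: the parameter names `eps` (the radius) and `min_pts` (how many points, including the point itself, must lie within the radius for a point to count as dense) are our own choice, and the label -1 for noise is a convention we adopt here.

```python
from math import dist

NOISE = -1

def dbscan(points, eps, min_pts):
    """Return one label per point: a cluster index, or NOISE."""
    labels = [None] * len(points)

    def neighbors(i):
        # all points within radius eps of point i (including i itself)
        return [j for j, q in enumerate(points) if dist(points[i], q) <= eps]

    cluster_id = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:  # not dense: noise for now
            labels[i] = NOISE
            continue
        cluster_id += 1           # dense point: start a new cluster
        labels[i] = cluster_id
        queue = list(seeds)
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:  # near a dense point after all: border point
                labels[j] = cluster_id
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_pts:  # j is dense too: keep expanding
                queue.extend(j_neighbors)
    return labels

# Two dense groups and one lonely point in between
points = [
    (0, 0), (0, 1), (1, 0), (1, 1),
    (10, 10), (10, 11), (11, 10), (11, 11),
    (5, 5),
]

labels = dbscan(points, eps=1.5, min_pts=3)
print(labels)
```

The lonely point at (5, 5) has no neighbors within the radius and is labeled as noise instead of being forced into one of the two clusters. Note that, unlike in k-Means, the number of clusters is not specified in advance; it results from the choice of radius and density threshold.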

Clustering Methods in Data Science – The Conclusion

With this article you have gained a first overview of which clustering methods are common, how they work (in simplified terms) and when they are best used. We owe these insights into the complex field of industrial machine learning to the expertise of our data science team, which includes contact persons such as Dr. Daniel Isemann, whom we thoroughly sounded out for this post. It was an appointment after which we felt simultaneously wiser and a bit dumber, having learned a lot, but also realizing what enormous dimensions data and its processing have taken on these days.

It is therefore all the more important that OEMs look for reliable partners with a high level of expertise and project experience in order to handle large projects effectively. Otherwise, the danger of searching for the proverbial needle in a haystack in the course of data segmentation is all too high, with the additional challenge that the needle is the size of a speck of dust, while the haystack is the size of a planet. In order to avoid costs and unnecessarily long development times, OEMs from the automotive sector as well as from other industries can contact us for an exchange of expertise or initial consultation. Finally, Cognizant Mobility, the parent company of the Mobility Rockstars, is also working outside the box and has already completed visionary projects in medical technology, for example.

It’s also best to follow us on LinkedIn and/or subscribe to our newsletter so that you don’t miss other exciting articles, such as the partner article on classification.